首页 > 基础资料 博客日记

本可避免的P1事故:Nginx变更导致网关请求均响应400

2025-07-24 20:30:02基础资料围观12974次

问题背景

项目上使用SpringCloudGateway作为网关承接公网上各个业务线进来的请求流量,在网关的前面有两台Nginx反向代理了网关,网关做了一系列的前置处理后转发请求到后面各个业务线的服务,简要的网络链路为:

网关域名(wmg.test.com) -> ... -> Nginx ->F5(硬负载域名fp.wmg.test) -> 网关 -> 业务系统

某天,负责运维Nginx的团队要增加两台新的Nginx机器,原因说来话长,按下不表,使用两台新的Nginx机器替代掉原先反向代理网关的两台Nginx。

SRE等级定性P1

一个月黑风高的夜晚,负责运维Nginx的团队进行了生产变更,在两台新机器上部署了Nginx,然后让网络团队将网关域名的流量切换到了两台新的Nginx机器上,刚切换完,立马有业务线团队的人反应,过网关的接口请求都变成400了。负责运维Nginx的团队又让网络团队将网关域名流量切回到原有的两台Nginx上,业务线过网关的接口请求恢复正常,持续了两分多钟,SRE等级定性P1。

负责运维Nginx的团队说,两台新的Nginx配置和原有的两台Nginx配置一样,看不出什么问题,找到我,让我从网关排查有没有什么错误日志。

不太可能吧,如果新的两台Nginx配置和原有的两台Nginx配置一样的话,不会出现请求都是400的问题啊,我心想,不过还是去看了网关上的日志,在那个时间段,网关没有错误日志出现。

看了下新Nginx的日志,Options请求正常返回204,其它的GET、POST请求都是400,Options是预检请求,在Nginx层面就处理返回了,新Nginx的日志示例如下:

10.x.x.x:63048 > - > 10.x.x.x:8099 > [2025-07-17T10:36:26+08:00] > 10.x.x.x:8099 OPTIONS /api/xxx HTTP/1.1 > 204 > 0 > https://domain/ > Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36 > - > [req_time:0.000 s] >[upstream_connect_time:- s]> [upstream_header_time:- s] > [upstream_resp_time:- s] [-]

10.x.x.x:63048 > - > 10.x.x.x:8099 > [2025-07-17T10:36:26+08:00] > 10.x.x.x:8099 POST /api/xxx HTTP/1.1 > 400 > 0 > https://domain/ > Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36 > - > [req_time:0.001 s] >[upstream_connect_time:0.000 s]> [upstream_header_time:0.001 s] > [upstream_resp_time:0.001 s] [10.x.x.x:8082]

去找了网络团队,从流量回溯设备上看到400确实是网关返回的,还没有到后面的业务系统,400代表BadRequest,我怀疑是不是请求体的问题,想让网络将那个时间段的流量包数据取下来分析,网络没给,只给我了业务报文参数,走网关请求的业务参数报文是加密的,我本地运行程序可以正常解密报文,我反馈给了负责运维Nginx的团队。

负责运维Nginx的团队又花了一段时间定位问题,还是没有头绪,又找到我,让我帮忙分析调查下。

介入调查

我说测试环境地址是啥,我先在测试环境看下能不能复现,负责运维Nginx的团队成员说,没有在测试环境搭建测试,这一次变更是另一个成员直接生产变更。

😓

我要来了新的Nginx配置文件和老的Nginx配置文件比对了下,发现有不一样的地方,老Nginx上反向代理网关的配置如下:

server {

listen 8080;

server_name wmg.test.com;

add_header X-Frame-Options "SAMEORIGIN";

add_header X-Content-Type-Options "nosniff";

add_header Content-Security-Policy "frame-ancestors 'self'";

location / {

proxy_hide_header host;

client_max_body_size 100m;

add_header 'Access-Control-Allow-Origin' "$http_origin" always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, DELETE, PUT';

add_header 'Access-Control-Allow-Headers' '...';

if ($request_method = 'OPTIONS') {

return 204;

}

proxy_pass http://fp.wmg.test:8090;

}

}

新Nginx配置如下:

upstream http_gateways{

server fp.wmg.test:8090;

keepalive 30;

}

server {

listen 8080 backlog=512;

server_name wmg.test.com;

add_header X-Frame-Options "SAMEORIGIN";

add_header X-Content-Type-Options "nosniff";

add_header Content-Security-Policy "frame-ancestors 'self'";

location / {

proxy_hide_header host;

proxy_http_version 1.1;

proxy_set_header Connection "";

client_max_body_size 100m;

add_header 'Access-Control-Allow-Origin' "$http_origin" always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, DELETE, PUT';

add_header 'Access-Control-Allow-Headers' '...';

if ($request_method = 'OPTIONS') {

return 204;

}

proxy_pass http://http_gateways;

}

}

新Nginx代理网关的配置与原有Nginx上的配置区别在于:

-

使用upstream配置了网关的F5负载均衡地址:

upstream http_gateways{ server fp.wmg.test:8090; keepalive 30; } -

设置http协议为1.1,启用长连接

proxy_http_version 1.1; proxy_set_header Connection "";

我让负责运维Nginx的团队在测试环境的Nginx上按照新的Nginx配置模拟了生产环境:

Nginx:10.100.8.11 监听9104端口

网关:10.100.22.48 监听8081端口

Nginx的9104端口转发到网关的8081端口,配置如下:

upstream http_gateways{

server 10.100.22.48:8081;

keepalive 30;

}

server {

listen 9104 backlog=512;

server_name localhost;

add_header X-Frame-Options "SAMEORIGIN";

add_header X-Content-Type-Options "nosniff";

add_header Content-Security-Policy "frame-ancestors 'self'";

location / {

proxy_hide_header host;

proxy_http_version 1.1;

proxy_set_header Connection "";

client_max_body_size 100m;

add_header 'Access-Control-Allow-Origin' "$http_origin" always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, DELETE, PUT';

add_header 'Access-Control-Allow-Headers' '...';

if ($request_method = 'OPTIONS') {

return 204;

}

proxy_pass http://http_gateways;

}

}

问题复现

通过Nginx请求网关到后端服务接口,问题复现,请求响应400:

curl -v -X GET http://10.100.8.11:9104/wechat-web/actuator/info

去掉下面的两个配置,请求正常响应200:

proxy_http_version 1.1;

proxy_set_header Connection "";

天外来锅

将这个现象反馈给了负责运维Nginx的团队,结果负责运维Nginx的团队查了半天说网关不支持长连接,要让网关改造。

😓

不应该啊,以往网关发版的时候,是滚动发版的,F5上先下掉一个机器的流量,停启这个机器上的网关服务,然后F5上流量,F5下流量的时候是有长连接存在的,每次都会等个5分钟左右才能下掉一路的流量。

得,先放下手头的工作,花点时间来证明网关是支持长连接的。

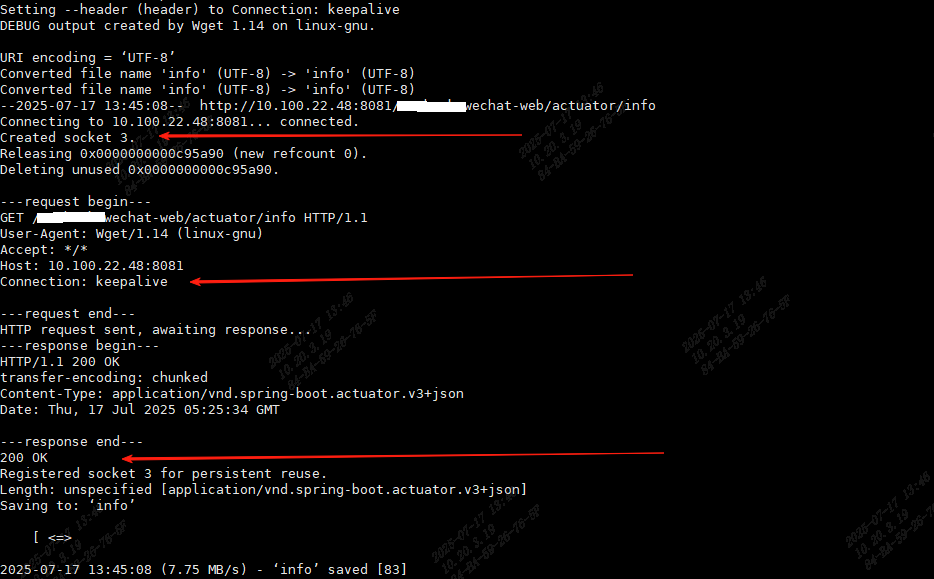

在Nginx机器上通过命令行指定长连接方式访问网关请求后端服务接口:

wget -d --header="Connection: keepalive" http://10.100.22.48:8081/wechat-web/actuator/info http://10.100.22.48:8081/wechat-web/actuator/info http://10.100.22.48:8081/wechat-web/actuator/info

回车出现如下日志:

Setting --header (header) to Connection: keepalive

DEBUG output created by Wget 1.14 on linux-gnu.

URI encoding = ‘UTF-8’

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

--2025-07-17 13:45:08-- http://10.100.22.48:8081/wechat-web/actuator/info

Connecting to 10.100.22.48:8081... connected.

Created socket 3.

Releasing 0x0000000000c95a90 (new refcount 0).

Deleting unused 0x0000000000c95a90.

---request begin---

GET /wechat-web/actuator/info HTTP/1.1

User-Agent: Wget/1.14 (linux-gnu)

Accept: */*

Host: 10.100.22.48:8081

Connection: keepalive

---request end---

HTTP request sent, awaiting response...

---response begin---

HTTP/1.1 200 OK

transfer-encoding: chunked

Content-Type: application/vnd.spring-boot.actuator.v3+json

Date: Thu, 17 Jul 2025 05:25:34 GMT

---response end---

200 OK

Registered socket 3 for persistent reuse.

Length: unspecified [application/vnd.spring-boot.actuator.v3+json]

Saving to: ‘info’

[ <=> ] 83 --.-K/s in 0s

2025-07-17 13:45:08 (7.75 MB/s) - ‘info’ saved [83]

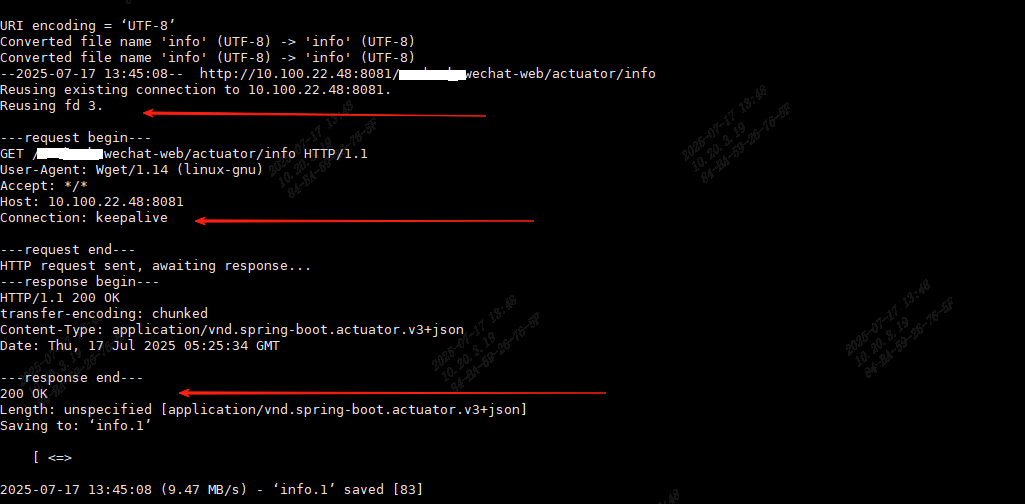

URI encoding = ‘UTF-8’

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

--2025-07-17 13:45:08-- http://10.100.22.48:8081/wechat-web/actuator/info

Reusing existing connection to 10.100.22.48:8081.

Reusing fd 3.

---request begin---

GET /wechat-web/actuator/info HTTP/1.1

User-Agent: Wget/1.14 (linux-gnu)

Accept: */*

Host: 10.100.22.48:8081

Connection: keepalive

---request end---

HTTP request sent, awaiting response...

---response begin---

HTTP/1.1 200 OK

transfer-encoding: chunked

Content-Type: application/vnd.spring-boot.actuator.v3+json

Date: Thu, 17 Jul 2025 05:25:34 GMT

---response end---

200 OK

Length: unspecified [application/vnd.spring-boot.actuator.v3+json]

Saving to: ‘info.1’

[ <=> ] 83 --.-K/s in 0s

2025-07-17 13:45:08 (9.47 MB/s) - ‘info.1’ saved [83]

URI encoding = ‘UTF-8’

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

Converted file name 'info' (UTF-8) -> 'info' (UTF-8)

--2025-07-17 13:45:08-- http://10.100.22.48:8081/wechat-web/actuator/info

Reusing existing connection to 10.100.22.48:8081.

Reusing fd 3.

---request begin---

GET /wechat-web/actuator/info HTTP/1.1

User-Agent: Wget/1.14 (linux-gnu)

Accept: */*

Host: 10.100.22.48:8081

Connection: keepalive

---request end---

HTTP request sent, awaiting response...

---response begin---

HTTP/1.1 200 OK

transfer-encoding: chunked

Content-Type: application/vnd.spring-boot.actuator.v3+json

Date: Thu, 17 Jul 2025 05:25:34 GMT

---response end---

200 OK

Length: unspecified [application/vnd.spring-boot.actuator.v3+json]

Saving to: ‘info.2’

[ <=> ] 83 --.-K/s in 0s

2025-07-17 13:45:08 (11.1 MB/s) - ‘info.2’ saved [83]

FINISHED --2025-07-17 13:45:08--

Total wall clock time: 0.1s

Downloaded: 3 files, 249 in 0s (9.25 MB/s)

可以看到第一个请求建立了socket 3,Connection: keepalive,请求成功,http响应状态码为200

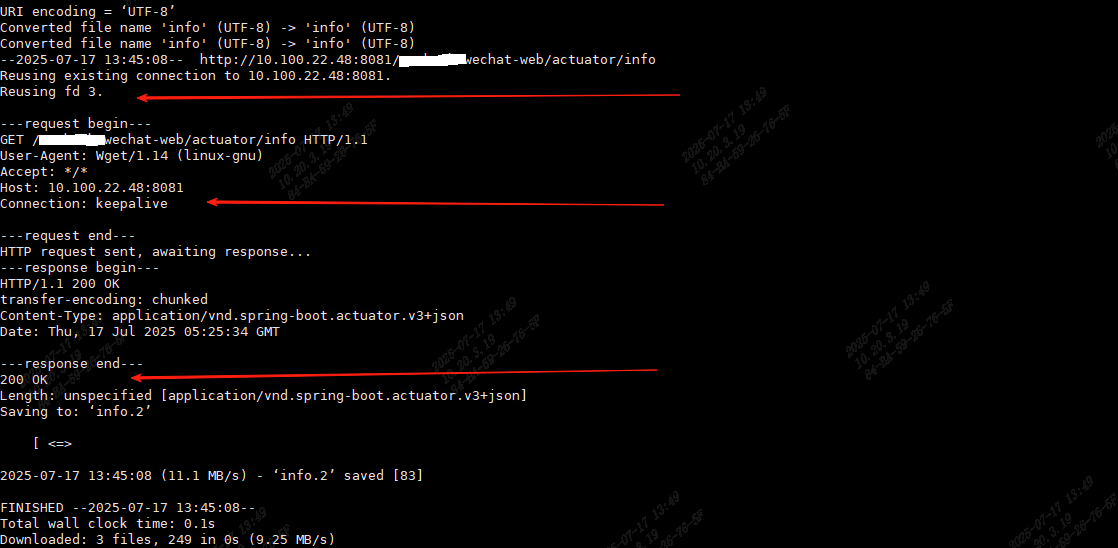

第二个请求重用了第一个连接,socket 3,Connection: keepalive,请求成功,http响应状态码为200

第三个请求依然重用了第一个连接,socket 3,Connection: keepalive,请求成功,http响应状态码为200

网关是支持长连接的,反馈给负责运维Nginx的团队,负责运维Nginx的团队又查了半天,又找到我说还是得拜托我来调查解决掉这个问题。

深度调查

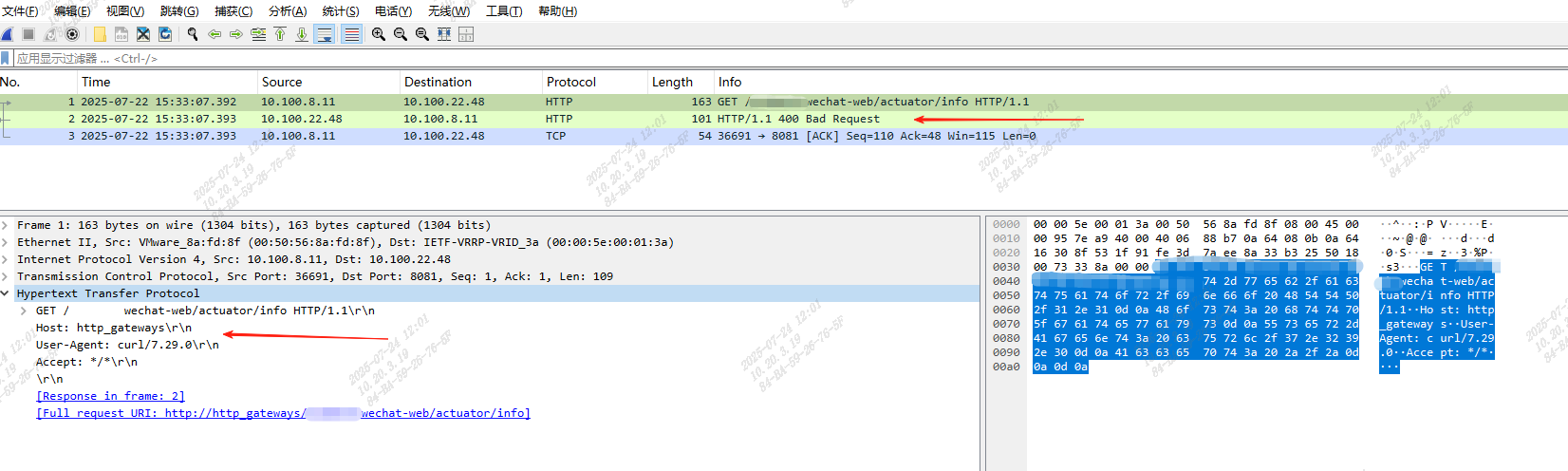

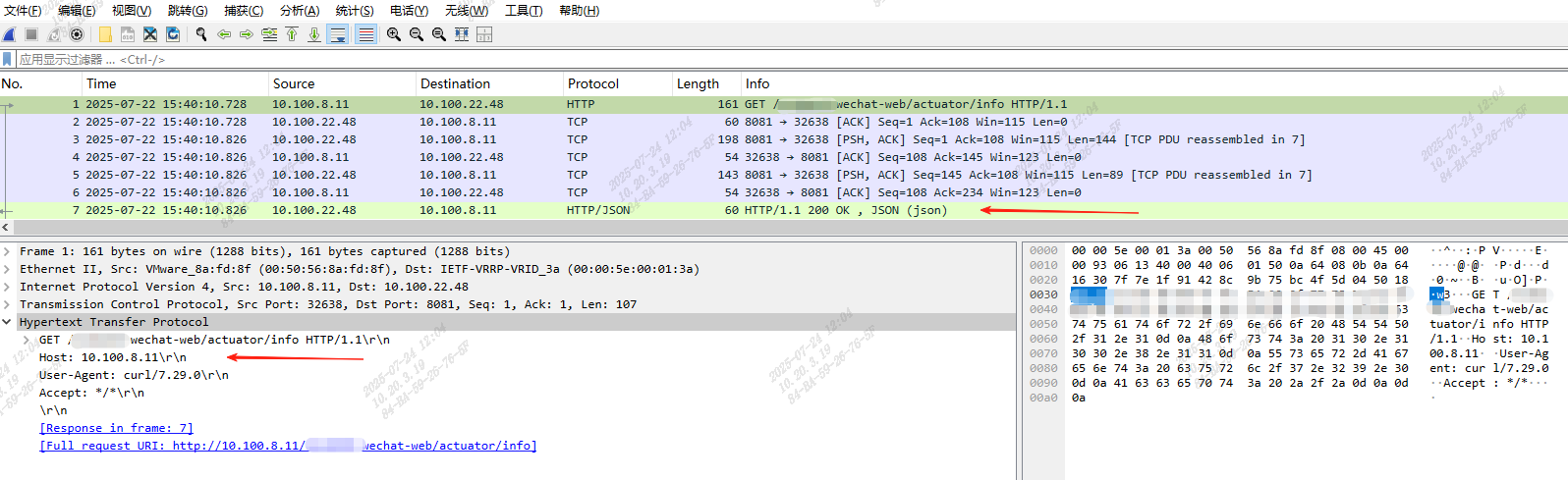

在测试环境Nginx机器10.100.8.11上使用tcpdump命令抓取与网关相关的流量包:

tcpdump -vv -i ens192 host 10.100.22.48 and tcp port 8081 -w /tmp/ng400.cap

找到出现http响应码为400的请求,可以看到流量包中的wechat-web/actuator/info请求响应为:HTTP/1.1 400 Bad Request

观察请求体,其中一个请求头Host的值为:http_gateways,这引起了我的注意:

查阅资料得到,HTTP/1.1协议规范定义HTTP/1.1版本必须传递Host请求头

- Both clients and servers MUST support the Host request-header.

- A client that sends an HTTP/1.1 request MUST send a Host header.

- Servers MUST report a 400 (Bad Request) error if an HTTP/1.1

request does not include a Host request-header.

- Servers MUST accept absolute URIs.

https://www.w3.org/Protocols/rfc2616/rfc2616-sec5.html#sec5.2

https://www.w3.org/Protocols/rfc2616/rfc2616-sec19.html#sec19.6.1.1

Host的格式可以包含:. 和 - 特殊符号,_ 不被支持

查阅Nginx的官方文档得知,proxy_set_header 有两个默认配置:

proxy_set_header Host $proxy_host;

proxy_set_header Connection close;

https://nginx.org/en/docs/http/ngx_http_proxy_module.html#proxy_set_header

可以看出Nginx启用了HTTP/1.1协议,Host如果没有指定会取$proxy_host,那么使用upstream的情况下,$proxy_host就是upstream的名称,而此处的upstream中包含_,不是合法的Host格式。

HTTP/1.1规定必须传递Host的一方面原因就是为了支持单IP地址托管多域名的虚拟主机功能,方便后端服务根据不同来源Host做不同的处理。

Older HTTP/1.0 clients assumed a one-to-one relationship of IP addresses and servers; there was no other established mechanism for distinguishing the intended server of a request than the IP address to which that request was directed. The changes outlined above will allow the Internet, once older HTTP clients are no longer common, to support multiple Web sites from a single IP address, greatly simplifying large operational Web servers, where allocation of many IP addresses to a single host has created serious problems.

那么只要遵循了HTTP/1.1协议规范的框架(Tomcat、SpringCloudGateway、...)在解析Host时发现Host不是合法的格式时,就响应了400。

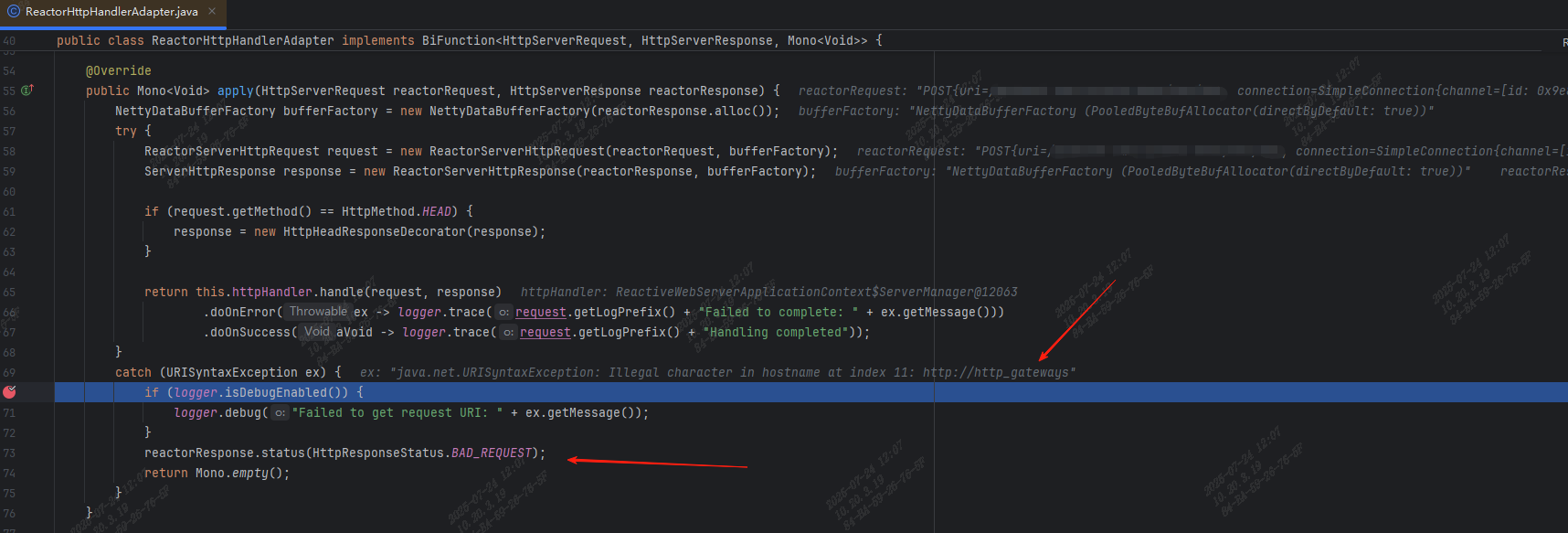

本地搭建了一个测试环境,debug了下网关的代码,在SpringCloudGateway解析http请求类ReactorHttpHandlerAdapter中的apply方法里面可以看到,解析Host失败会响应400:

下面是SpringCloudGateway解析http请求类ReactorHttpHandlerAdapter中的apply方法逻辑:

public Mono<Void> apply(HttpServerRequest reactorRequest, HttpServerResponse reactorResponse) {

NettyDataBufferFactory bufferFactory = new NettyDataBufferFactory(reactorResponse.alloc());

try {

ReactorServerHttpRequest request = new ReactorServerHttpRequest(reactorRequest, bufferFactory);

ServerHttpResponse response = new ReactorServerHttpResponse(reactorResponse, bufferFactory);

if (request.getMethod() == HttpMethod.HEAD) {

response = new HttpHeadResponseDecorator(response);

}

return this.httpHandler.handle(request, response)

.doOnError(ex -> logger.trace(request.getLogPrefix() + "Failed to complete: " + ex.getMessage()))

.doOnSuccess(aVoid -> logger.trace(request.getLogPrefix() + "Handling completed"));

}

catch (URISyntaxException ex) {

if (logger.isDebugEnabled()) {

logger.debug("Failed to get request URI: " + ex.getMessage());

}

reactorResponse.status(HttpResponseStatus.BAD_REQUEST);

return Mono.empty();

}

}

SpringCloudGateway通过debug级别日志输出这类不符合协议规范的日志,生产日志级别为info,因此不会打印这样异常的日志。

解决方案

既然HTTP/1.1协议规定必须传递Host且没有通过配置显式指定Nginx传递的Host时Nginx会有默认值,那么在Nginx的配置中增加传递Host的配置覆盖默认值的逻辑,查阅Nginx的文档,可以通过增加下面的配置解决:

proxy_set_header Host $host;

在测试环境Nginx9104端口代理配置中增加上面的配置,再次执行,请求正常响应200。

完整配置如下:

upstream http_gateways{

server 10.100.22.48:8081;

keepalive 30;

}

server {

listen 9104 backlog=512;

server_name wmg.test.com;

add_header X-Frame-Options "SAMEORIGIN";

add_header X-Content-Type-Options "nosniff";

add_header Content-Security-Policy "frame-ancestors 'self'";

location / {

proxy_set_header Host $host;

proxy_hide_header host;

proxy_http_version 1.1;

proxy_set_header Connection "";

client_max_body_size 100m;

add_header 'Access-Control-Allow-Origin' "$http_origin" always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, DELETE, PUT';

add_header 'Access-Control-Allow-Headers' '...';

if ($request_method = 'OPTIONS') {

return 204;

}

proxy_pass http://http_gateways;

}

}

解决方案不止一个:

- 可以修改upstream的名称,去掉不支持的_,比如更换为:http-gateways、httpgateways

- 还可以直接指定Host的值为域名(domain),proxy_set_header Host 'doamin';

总结

这个问题只要在测试环境测试下,是必现的,不属于测试case没有覆盖到的范畴,一定要重视测试流程,很多流程看似繁琐,其实都是血与泪的教训得来的。

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如若内容造成侵权/违法违规/事实不符,请联系邮箱:jacktools123@163.com进行投诉反馈,一经查实,立即删除!

标签: